I recently took a survey sponsored by the Hertz Corporation, intended to assess the appeal of several new approaches to services. This post discusses some of the problems I found, and why you should avoid creating your surveys like this one.

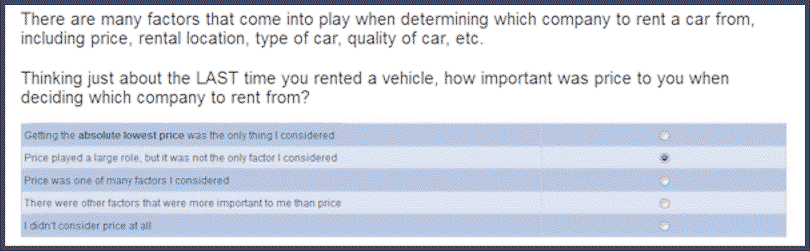

After asking about the number of times I had rented a vehicle, for what purpose, what type, and from which company, they asked how important price was to me when deciding which company to rent from the last time.

This is a pretty good question.

Now they have an anchor for later questions about price: they know how important price was to me for this last rental. Note that the importance of price (or of any other factor, come to that) is situational, certainly for me. The last car I rented before taking the survey was for a vacation on Maui for a family wedding. I didn't want a fancy car, or at least I didn't feel I could justify one for this trip. The wedding and all the fun things to do on Maui were going to be pretty expensive, and I expected to be driving on some substandard roads. I needed something flexible enough to carry other members of the party, since I expected people to be juggling activities. So I chose a four-door.

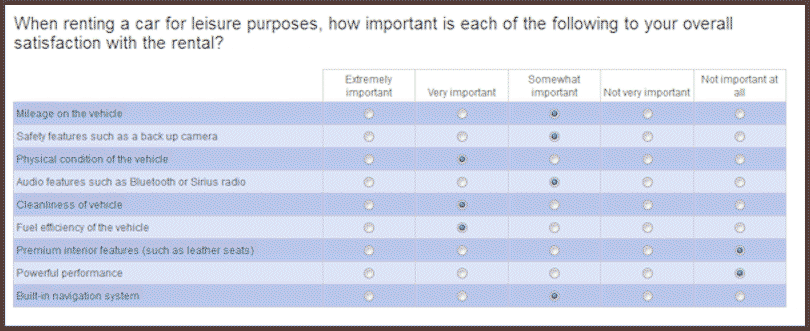

The next section of the survey covered the importance of various features, the mileage, and the condition of the vehicle. One question asked about the importance of general areas such as performance, cleanliness, audio features, and fuel efficiency.

Most of the options in that question made sense to me, but mileage on the vehicle doesn't seem like something that belongs in the list. From my perspective, mileage is going to relate to the physical condition and cleanliness of the vehicle. When I pick up a rental car, the current mileage is a minor item, perhaps something to note for the contract, especially if there is a mileage limit on my rental. Perhaps I should pay more attention, but I don't remember ever thinking about it as I signed a contract. Perhaps somebody pointed out that this was a low-mileage vehicle; I don't remember. I guess I always expect fairly low mileage on a rental car, especially from one of the major companies. Anyway, I wasn't too surprised to see mileage in that first question, although it struck me as a little odd for the reasons I've just described. The next question asked me to rank the top three of the nine options provided previously; this still made sense.

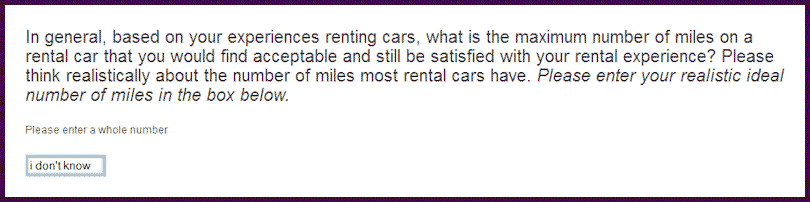

Things went downhill from here. The next question asked for the maximum mileage on a rental car that I would still find acceptable and be satisfied with. The question is shown in the screenshot above.

Puzzled as I was by the notion that I could come up with an answer (note that the question text stresses "realistic"), I tried to enter "I don't know" into the box in the forlorn hope that it would be accepted, even though the instructions read "Please enter a whole number." My attempt generated an error message repeating the directive, this time in bold red: "Please specify a whole number." There was also a red warning at the top of the screen telling me that I needed to follow the instructions. These validation messages weren't too surprising, but I was disappointed to find that I couldn't indicate my real feelings. Next I tried entering "0". This generated a different error message: "Sorry, but the value must be at least 100." I think this was the point at which I realized that this survey was going to provide material for an article. Expecting further fun and games, I decided not to waste much more time on this one question. I entered 10,000 and was allowed to proceed to the next question.

Lesson 1. What's the worst thing about this little battle with the question? The data they got from me is rubbish. Because the question accepted neither "I don't know" nor any value below 100, a respondent without a real answer had to invent one. If enough other people are equally uncaring or, like me, have no realistic idea of what mileage would be satisfactory, Hertz is making decisions on a very shaky foundation. Read on; it gets worse.

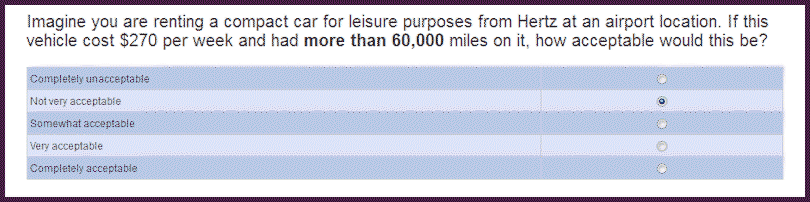

The introduction to the next section described what I would see next: "…several scenarios – understanding your opinion when renting a vehicle. Please think about what makes a car rental experience enjoyable." This sounded pretty good, but it turned out that they just wanted to torture me on the mileage issue in a different way.

"Uh oh," went through my mind. "I wonder how they'll play this out? Are we going to be negotiating on the mileage?" Yes, that's exactly what happened. I responded "Not very acceptable" to the first round of this question. I don't really know whether it's acceptable or not, but I'm pretty sure Hertz wants me to believe that it isn't. I was hoping for a single question on the subject, but that's not how it worked. The next question used exactly the same wording, except that "had more than 60,000 miles on it" was replaced by "had 50,000 miles on it". There was an additional instruction too: "Please note that the question remains the same, but the NUMBER OF MILES ABOVE has changed." Isn't there some form of torture based on telling the victim what's going to happen? But now I was curious, and I wanted to see how long this could go on. 60,000, 50,000, 40,000, 35,000, 25,000, 20,000, 15,000, 10,000. I answered "Not very acceptable" every time. At 5,000 (yes, that's the ninth round) I chose "Somewhat acceptable" and was allowed to move on to the next torture chamber.

Lesson 2. Why doesn't this repetitive approach work? For one thing, it's boring. Even if someone has a realistic idea of a good number (perhaps a rental car should have mileage as low as a regularly replaced car at home), they still have to sit through the whole routine to reach the acceptable number. And it's a negotiation: "How low a mileage can I get for the same $270?" This is where annoyance and fatigue are going to build up. The result is bad data and a greater chance that respondents drop out, which makes representative results less likely.

Idiosyncratically,

Mike Pritchard